Editor’s note: The following is a guest article from Arvind Raman, SVP and CISO at BlackBerry.

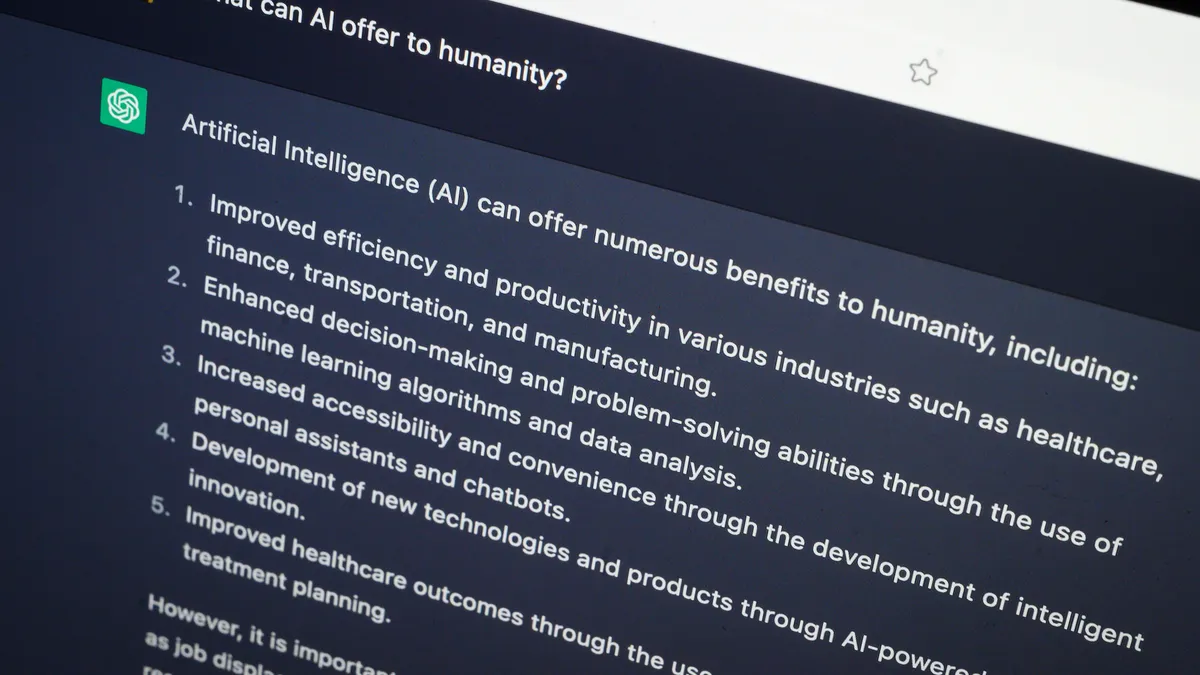

Companies across the world are taking measures to regulate how their employees use OpenAI’s ChatGPT at work. As with all new technologies, generative AI models like ChatGPT can be a source of both benefits and risks.

After researching industry best practices, some organizations are deciding that, at least for now, the risks outweigh the benefits. As a result, they may declare the use of ChatGPT unauthorized and block access to it from company networks until appropriate support and direction can be established.

Nearly half of human resources leaders polled by Gartner said they were formulating guidance on their employees' use of ChatGPT. That is unsurprising, given how many people have admitted to using the chatbot at work.

A Fishbowl survey suggests 43% of working professionals have used AI tools like ChatGPT to complete tasks at work, and more than two-thirds of those users hadn't told their bosses they were doing so.

ChatGPT has been described as the fastest-growing consumer application in history, reportedly amassing more than 100 million users within two months of its launch. For comparison, WhatsApp took three and a half years to reach that many users, and Facebook took four and a half years.

ChatGPT is also one of the first AI-driven natural language chatbots to be made widely accessible to the public. It can hold human-like conversations and generate content such as emails, books, song lyrics, and application code. There's a good chance some of your employees are using it today.

But is leveraging ChatGPT or similar AI-powered chatbots safe for your organization? And what are the implications for security, privacy, and liability?

Considering the risks

It’s still too early to know all the potential trade-offs associated with this AI tool, but there are some risks from using ChatGPT — and an increasing number of similar tools — that every organization should consider.

If sensitive third-party or internal company information is entered into ChatGPT, the provider may retain it and use it to train future versions of the model. That information could then surface in responses to other users who ask related questions, resulting in data leakage. Any unauthorized disclosure of confidential information to ChatGPT (or any other online service) may also violate an organization's security policies.

If ChatGPT's security is compromised, content that an organization was contractually or legally required to protect could be leaked and traced back to the organization, damaging its reputation.

ChatGPT is a third-party system that may retain the information submitted to it. Even if the chatbot's security is never compromised, sharing confidential customer or partner information with it may violate your agreements with those customers and partners, since you are often contractually or legally required to protect this information.

There are also complications around who owns the code that ChatGPT generates. OpenAI's terms of use assign ownership of ChatGPT output to the user who provided the input. Complications arise when that output reproduces legally protected material drawn from other sources.

Copyright concerns also arise if ChatGPT is used to generate material derived from copyrighted works, including open-source code distributed under a license.

For example, suppose ChatGPT was trained on open-source libraries and "replays" that code when answering a question. If a developer then puts that code into a product the company ships, the company could end up violating restrictive ("unfriendly") open-source software (OSS) licenses, such as copyleft licenses that require derivative works to be released under the same terms.

OpenAI's terms of use also prohibit using ChatGPT output to develop models that compete with OpenAI. Using ChatGPT this way could jeopardize future AI development if your company works in that space.

Privacy considerations of AI chatbots

ChatGPT currently warns users not to enter sensitive or personal information, such as names or email addresses. However, it's unclear how OpenAI complies with international privacy laws, or whether appropriate controls are in place to protect personal data and respect individuals' rights to their data.

Providing any personal data to a generative AI system opens the possibility that the data could be reused for other purposes, potentially resulting in misuse and reputational harm.

Using personal data for unapproved purposes may violate the trust of the individuals who provided their information to your organization, and breach the organization's privacy commitments to employees, customers, and partners.

Organizations should evaluate whether ChatGPT, and the many AI tools that will follow it, are safe to use. Embracing leading-edge technologies can certainly enable business, but new platforms should always be evaluated for potential cybersecurity, legal, and privacy risks.